Most people on Reddit might not even be people

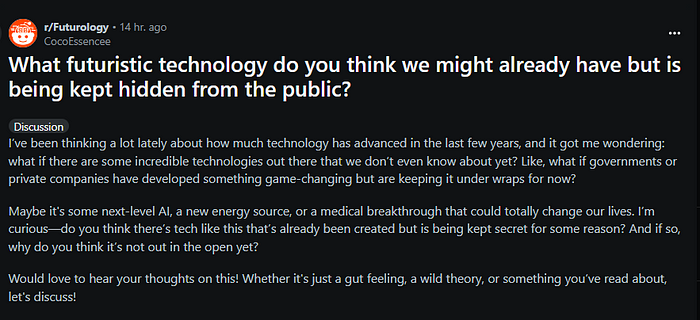

There is something very, very wrong in the above post. And no, it’s not the obvious timeless scam being perpetrated here by this person named Naya. Can you see it?

Ready for it? Naya is not a real person. In fact, this post was not written by any person at all. It was entirely generated by ChatGPT. You can try DMing this account, and you will get very, very human replies. “She” posts every day, and also comments on posts by others.

Only, there’s no person on the other end. It’s an automated bot. Read on to know more.

Entering the rabbit hole

I sometimes spend time on penpalling platforms when I’m bored, trying to strike up a conversation with people who share similar interests. July 13 was no different for me. While scrolling through a subreddit, I saw a post made by an account “u[/]innclosure”. It was very similar to the post you saw earlier. I knew right away that it was a scam.

But curiosity got the better of me. Firstly, the post was much better worded than most scam posts you see. Secondly, the account looked very active on other subreddits, even posting about stuff unrelated to “Hey I wanna meet you”.

So I decided to DM the account. I wanted to get an idea of how the scammer(s) operate. What followed blew my mind!

A sophisticated scam

A very human chat

One of the best ways to see the sophistication of a scam is to first express distrust in the scammer, then express a slight desire to buy what they’re selling. That way you get better coverage of their tactics: how they persuade, how much they persuade, and how they reel in their targets, followed by the actual scam. Plus, it gets them to drop their defenses because they start believing that they’ve gotten you.

I start off the conversation in a friendly, human way, building upon “our” shared interest in music. After a few minutes of conversation, “she” shills her “OnlyF[a]ns” account. After initially rejecting her requests to sign up on her page, I agree, and she shares the page. And then I go silent for 2 days.

Notice how, even without any reply from me after that, she texts me each day to follow up.

I then reply on the 3rd day. I repeat my initial approach of refusing and then accepting her requests. Observe how her T-shirt in the photograph has changed, indicating it’s from a different set of photos.

Uncovering the bot

Thing is, even at this stage, the idea had not occurred to me that I was chatting with a bot rather than a person. But then it hit me. I was going through her posts, and they read as way too perfect. It was the same information, but conveyed in a different way in each post. “Could it be that these are just ChatGPT variations of the same text?”

So I copied one of her posts, went to ZeroGPT, and tested the text.

Yup. It’s fully generated by ChatGPT. But detection tools like these can have false positives. So I try another post of hers. And another. And another. All the same. All by ChatGPT. And it’s been going on for more than a year.

Breaking the bot

At this point, I wanted to know if someone else had looked into this. So I began looking. I did not have to look far. It seems that a few people on r/ChatGPT have begun noticing this scam.

It’s not just that the posts are written by ChatGPT. The DMs are too. It’s an all-automated bot.

All the while, there was just one thing running through my mind: “How many people on Reddit are not even people, just bots?” So I decided to go a step further and find out.

War against the bots

I decided to write a bot that would periodically scan a few subreddits and detect AI-generated posts (yes, I used a bot to fight against bots). On detected posts, it would comment with the detection result, making other redditors aware that the author might be a bot, and hence that the post might be a scam.

It took me a few days to get things right; the Reddit API isn’t exactly built for fast scraping, and I got impatient. Multi-threading did not sit well with the API, so I had to fine-tune my bot to process posts as fast as it could without being suspended by Reddit.

Finally I was done, and it was time to actually start.

I kept my bot running for days on my Raspberry Pi. Every hour it would scan around 10 subreddits and the 500 latest posts. Around 10% of them would be detected as AI-written. My bot would then comment this result under each detected post.

And Reddit would keep suspending my bot account. Apparently, writing just one comment every 10 minutes is “Too many requests”. Each time my bot account was suspended, I would make another and continue.
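To make the pacing concrete, here is a minimal sketch of a throttle that allows at most one comment per fixed interval. It is not the code the bot actually ran: the class name `CommentThrottle`, its methods, and the injectable `clock` are all illustrative assumptions, and only the 10-minute interval comes from the story above. It also makes no Reddit API calls, and as described above, pacing like this was not enough on its own to avoid suspension.

```python
import time
from typing import Callable, Optional


class CommentThrottle:
    """Allow at most one action per `min_interval` seconds (hypothetical helper)."""

    def __init__(self, min_interval: float = 600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        # 600 seconds = the "one comment every 10 minutes" pace from the post.
        self.min_interval = min_interval
        self._clock = clock  # injectable so the logic can be tested without waiting
        self._last_action: Optional[float] = None

    def seconds_until_ready(self) -> float:
        """Return how long to wait before the next action is allowed."""
        if self._last_action is None:
            return 0.0
        elapsed = self._clock() - self._last_action
        return max(0.0, self.min_interval - elapsed)

    def try_acquire(self) -> bool:
        """Record an action and return True if allowed now, else return False."""
        if self.seconds_until_ready() > 0:
            return False
        self._last_action = self._clock()
        return True
```

A bot loop would call `try_acquire()` before each comment and sleep for `seconds_until_ready()` whenever it returns `False`.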

In the end, it was too much for me. At this rate, I would have to create 10 accounts a day just to keep the detection running. And this goes against my principles. After all, my accounts are also bots.

After 3 days of running my bot, I decided to stop. This was not the right way to spread awareness and detect the scammers. Instead, I decided to write this post, hoping to draw some attention to this.

How the scam works

Having gathered some information on how these scam bots operate, here are my observations.

Capabilities of the bots

- Not just reply-driven: The bots don’t operate with a “reply only when replied to” model. They actively maintain a list of people they have been talking to, compare the timestamps of the last interaction, and if the gap is above a certain threshold, they follow up on their own.

- Pictures are context-driven: The bots know which pictures to use when. On the first day, each picture I got from the bot had the “girl” wearing a black T-shirt. And on the third day, each picture had a white T-shirt. This adds realism to the otherwise bot-generated texts.
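The follow-up behaviour in the first point can be sketched in a few lines. This is only a guess at the logic, inferred from how the bot behaved in my chat; the function name `contacts_needing_follow_up`, the dictionary layout, and the one-day default threshold are all assumptions, not anything recovered from the scammers' code.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def contacts_needing_follow_up(last_interaction: Dict[str, datetime],
                               now: datetime,
                               threshold: timedelta = timedelta(days=1)) -> List[str]:
    """Return the users whose last interaction is at least `threshold` old.

    `last_interaction` maps a username to the time of the last message
    exchanged with that user (a hypothetical bookkeeping structure).
    """
    return sorted(
        user
        for user, last_seen in last_interaction.items()
        if now - last_seen >= threshold
    )
```

Run on a schedule, a check like this would be enough to produce the daily nudges I received while I stayed silent.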

Escalation of the scam

One thing to note from the posts is that there is always just one location mentioned — Madrid, Spain. It’s possible that only one location was chosen so that the account looks legitimate.

But it’s also possible, judging from the nature of the posts, that this account wants to scam people at a very particular location. I can’t ascertain if the scam escalates to entice people with the promise of a meeting.

If such is the case, we could be looking at a far more dangerous situation — kidnapping, human trafficking, you name it.

Signs to look out for

If you happen upon an account and doubt whether it’s a bot, here are some ways to detect it.

- All posts made at the same hour: Looking at the profile of the bot, it can be seen that it posts multiple similar posts across tens of subreddits all within the same hour, then goes dormant for a few days before starting the cycle all over again.

- More posts than comments: Any normal person would have far more comments than posts. The reason is simple: it’s easier to reply to someone else’s content than to create some content of your own. However, for the bot it was the opposite. Posts outnumbered the comments.

- “Normal” content in the beginning: The bot accounts always start with normal, human-looking interactions, and are only used for scams after a few weeks or months. This is called “aging an account”. It is done so that the account appears legitimate. Many platforms don’t let you interact with other members and communities unless your account is older than a certain threshold.
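The first two signs are easy to check mechanically once you have an account's post timestamps and comment count. The sketch below is a rough illustration, not a reliable detector: the function names, the `burst_threshold` of 10 posts, and the choice to bucket by clock hour are all assumptions. A burst that straddles an hour boundary would be split across two buckets, so treat the result as a hint only.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, List


def largest_hourly_burst(post_times: List[datetime]) -> int:
    """Return the largest number of posts that fall within one clock hour."""
    if not post_times:
        return 0
    # Truncate each timestamp to the start of its hour and count per bucket.
    buckets = Counter(
        t.replace(minute=0, second=0, microsecond=0) for t in post_times
    )
    return max(buckets.values())


def account_signals(post_times: List[datetime], comment_count: int,
                    burst_threshold: int = 10) -> Dict[str, bool]:
    """Flag the two profile-level signs described in the list above."""
    return {
        # Sign 1: many posts crammed into the same hour.
        "burst_posting": largest_hourly_burst(post_times) >= burst_threshold,
        # Sign 2: posts outnumber comments.
        "more_posts_than_comments": len(post_times) > comment_count,
    }
```

Neither flag proves anything on its own, since plenty of real people post in bursts, but both together on one account are worth a second look.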

My final thoughts — the future of digital scams

The bots don’t attempt to do something novel. The scams perpetrated are timeless. Whether it is luring people to sign up on websites with the promise of illegal goods and services, or straightforwardly asking for money by pretending to be financially struggling, the list is the same.

However, these scams are now going to skyrocket, because unlike before the AI boom, when scams required real people to perpetrate them, all they require now is a carefully written script.

Running a hundred instances of the same script would instantly generate a hundred profiles. Running it on botnets and server farms would escalate this to thousands or millions of bots.

For all I know, a good proportion of accounts on all social media sites are already just bots, run by the social media companies themselves to drive up the engagement statistics to mislead their shareholders.

My intention with this post was twofold — to spread awareness, and to ask for help from the community to tackle this issue, because let’s face it, the corporations themselves would take no measures unless compelled. If you can take my project forward, and improve upon it, please reach out to me.

And for the rest of you, stay safe online. As a parting gift, I have this practice exercise for you. Try finding out if this post was written by a real person.

Here’s the link to my bot again, in case you wish to tinker with it or run it yourself.